With support from the Medical Simulation & Information Sciences Research Program (MSIS) at the U.S. Army Medical Research & Development Command (USAMRDC), Vcom3D is developing authoring tools, assessment instruments, and learning system interfaces for building multi-modal (live, virtual, manikin) medical training scenarios that conform to the Modular Healthcare Simulation and Education System (MoHSES) standards. These tools have the potential to lower the cost and increase the interoperability of medical simulation systems for multi-modal training that all services can use at multiple echelons of care. The project will also contribute to the open standards, open software, modules, and tools available to developers of MoHSES-compliant modules and training systems. It is funded through MTEC’s Multi-Topic Request for Project Proposals (Solicitation #17-08-MultiTopic).

Medical simulation and training is currently conducted largely independently within each Service, at deployment-specific training locations, using training scenarios, devices, and performance metrics specific to each role and Service. Recent changes to military doctrine now require a Multi-Service, Joint response. In response, the Department of Defense has invested in open source and open standards projects, including the Modular Healthcare Simulation and Education System (MoHSES, formerly called Advanced Modular Manikin) and the BioGears Physiology Engine, which could be used to increase interoperability and lower the cost of creating new training simulations. However, tools for building simulation-based training on these open source projects have been lacking. To fill this gap, Vcom3D is developing its Synthetic Training Environment for Multimodal Medical Training (STEM3T), which provides exemplar multi-service, multi-modal training scenarios, as well as tools for creating new scenarios more cost-effectively than existing platforms allow.

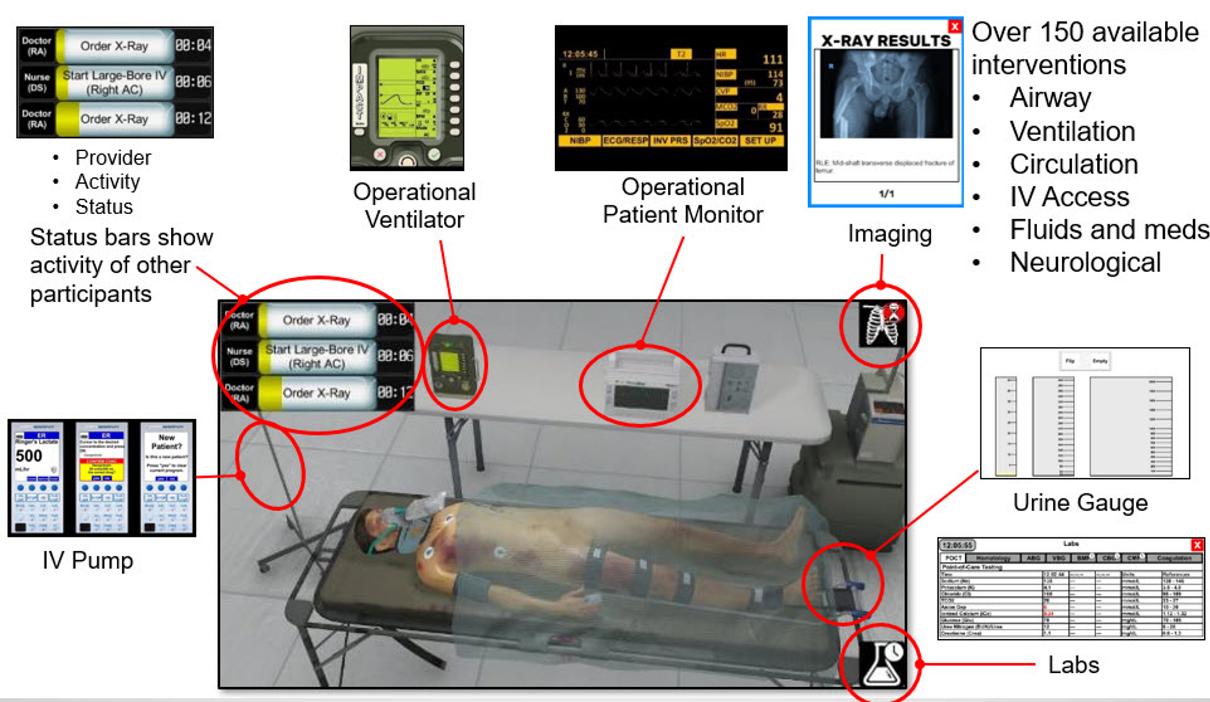

Geographically distributed learners treating a burn patient in virtual field hospital.

As part of STEM3T, Vcom3D has integrated and demonstrated a Test Bed of MoHSES-compliant live, virtual, and manikin-based simulation modules. These include five virtual patient cases; a realistic medical manikin that displays appropriate physiological responses to treatments, or to their absence; and instrumented medical devices for live training exercises, which provide low-cost alternatives for simulation scenarios. The virtual patient cases include a burn victim, male and female polytrauma cases, and an infectious disease case. Patients are treated in settings ranging from the point of injury, to austere field hospitals, to full-service stateside hospitals. The cases include a detailed physiology model with over 150 available interventions. Integrated learner assessment combines automated logging and evaluation of learner actions with instructor observations of teamwork. You can view videos of Vcom3D’s work on both military and civilian patient cases here.

Within the scope of the current project, Vcom3D will complete and test the scenario file standard, the authoring tool, and the xAPI-conformant Learning System Interface. Vcom3D will then evaluate how effectively these components support building open-standards, multi-modal medical training solutions. The STEM3T team is led by Ed Sims, PhD, CTO, Vcom3D. Key contributors include Dan Silverglate, VP System Architecture and Development; Rachel Wentz, PhD, Medical Simulation PI; and Doug Raum, Senior Software Developer. Carol Wideman, CEO, Vcom3D, is leading transition and commercialization efforts. Pam Latrobe is the project’s Senior Program Manager.

Each patient case can be treated using realistic field hospital or air evacuation equipment.

About Vcom3D:

Vcom3D develops simulation technologies and training systems that fill gaps in medical learning. Its immersive systems provide training environments in which medical teams can improve decision-making skills and team performance, and learn or practice medical procedures. Vcom3D develops authentic content using research-based behavioral and physiological models, engages learners in immersive game play, and assesses performance in realistic simulations. Learning experiences are delivered on tablets, personal computers, virtual or augmented reality displays, and instrumented physical models.